Hey Voice This! fam 👋🏼

We are living in a post-skill apocalypse. In this field, you could have taken a month of PTO last December and come back to an entirely new Conversational AI landscape in the new year. And that’s exactly what happened to one of the authors of Voice This! Newsletter. Since they came back online in January, ChatGPT and LLMs have steamrolled their way into the lives of modern professionals. You can’t open LinkedIn today without hearing about the benefits of these conversational models.

Voice skills and actions are on their way out. We knew it was happening when Google announced it would no longer allow third-party voice experiences on its device ecosystem, but at the time, few of us knew exactly what would replace them. Four months into 2023, I think we can officially say the role and responsibilities of a conversation designer are both unchanged and changing.

It all comes back to the question, “What do conversation designers actually do?” If your answer was, “They write chatbot responses,” then I’m afraid you didn’t actually understand the value of conversation designers.

It’s intentional that CxD contains “design” in the name (rather than the many other title variations some companies throw around, like “Chatbot Writer” or “Conversational AI Specialist”), because conversation designers are to conversational interfaces what UX designers are to web and app interfaces: champions of the user and mediators between business needs and the needs of the target audience. We’ve seen conversation designers described as the person who “defines the interaction between humans and machines, by making strategic choices on what the machine says, how it says it, and when it’s said.” With the introduction of ChatGPT into the public eye, there has been a large push to automate the “what” (the content) of a conversational experience.

That’s all fine and good, but the role of a CxD is to strategically design a conversational experience. We know from linguistics that language is more than the words we use: it is a form of self-expression, and it can reveal a lot about who we are as people, including our background, behaviors, beliefs, and values. By extension, a chatbot can also reveal what its brand (company) values and prioritizes.

“Research has also shown that people treat computers as social beings when the machines show even the slightest hint of humanness, such as the use of conversational language.” [source]

That’s why crafting these conversations is so tricky: what you put out in a conversational experience can a) be taken seriously by the user and b) always be tied back to the brand.

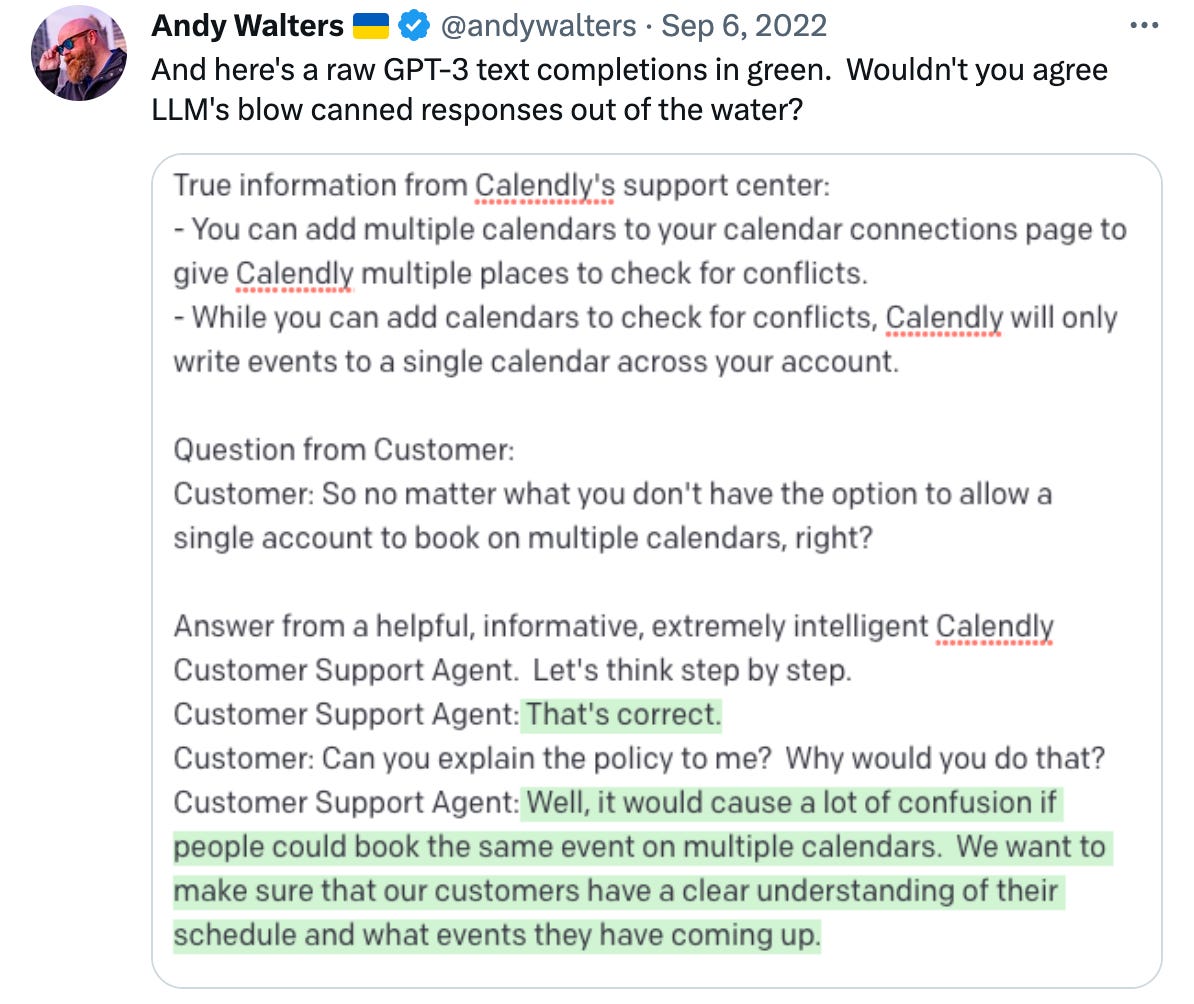

As conversation designers in the era of LLMs, it is our duty to be ethical designers. Our most important responsibility is to help create safeguards for these experiences. We need to work with our teams to figure out which topics are off-limits, which topics need careful handling if a user raises them, and how to maintain user trust by making sure the experience provides reliable, accurate information.

This newsletter issue contains resources to help conversation designers get started down the path of working with LLMs, without the fear that their role might become obsolete in a few years.

Stay tuned for some very exciting developments with our podcast and newsletter! If you subscribe, you’ll hear all the breaking news before the rest of the internet does.

Podcast Corner 🎙️

Voice This! Podcast season 1 has ended! While we ramp up for season 2, we want to highlight other helpful podcast episodes.

Design for Voice, episode with Brooke Hawkins

Quote from the episode:

Q: What does the scope of ethics really include?

Brooke: The scope of ethics for me is embedding the question of “what means good” for the people I’m designing for in the context of the service that I’m providing them.

The Voicebot Podcast, episode with Andrei Papancea

Quote from the episode:

Q: Why are LLMs better at intent identification than most NLUs today?

Andrei: The sheer amount of data these systems were trained on is ungodly large. They have concepts of brands and abstractions that humans use on a regular basis that you otherwise would have to specifically train a system on.

On guardrails:

Andrei: For us, it is very important whenever we integrate anything is that we give our customers control. Obviously, when it comes to the GPT-3s and ChatGPTs and any type of large language model-driven AI, those guardrails are quite important. I’ve been on a little bit of a speaking tour with some of our largest customers over the past couple of weeks, walking them through what the integration is. Then also putting out the disclaimers and the yellow flags of “Hey, here are some controls you can put in place”… Also showing them that the open-endedness of [it] could be detrimental to one’s brand. So, use it cautiously.

Reading Pit Stop 📚

Our favorite reads from the past few weeks, including some oldies but goodies. Happy reading!

Where are LLMs useful?

“ChatGPT Lifts Business Professionals’ Productivity and Improves Work Quality”, research summary by Nielsen Norman Group.

“[W]hen using ChatGPT: much more time was saved in drafting than was expended on additional editing. Conversely, it’s possible that the additional time spent editing the final deliverable contributed to the higher rated quality of the AI-assisted documents.”

Ex-Apple engineer says Siri is way too clunky to ever be like ChatGPT

Empowering AI Trainers w/ LLMs, video interview with Willem Don

“The better the prompt, the better the output!” all about Prompt Engineering

Staff conversation designer Rawan Abushaban has now published two articles on how to use ChatGPT to aid the CxD process:

How Useful are Educational Questions Generated by Large Language Models?

“AI can generate questions that are highly useful for teaching. The generated questions are relevant to the topic 97% of the time, grammatically correct 96% of the time, and answerable from the inputs used to generate them 92% of the time.”

LLMs: where should we have caution?

Andrei’s thoughts: Brands Beware - Hidden dangers within Large Language Models

For the sustainable designers out there: ChatGPT is consuming a staggering amount of water

“Artificial intelligence raises crucial and new questions, especially with regard to data protection.” AI: ensuring GDPR compliance

Audience Q&A 💌 with Kritika Yadav!

Finally, this is the part of our newsletter created by YOU! In this issue, we feature 2 questions from our listeners.

Once again, we’ve brought in an expert to help answer our listener questions. Meet Kritika Yadav: senior conversation design BOSS at Flipkart, ex-Haptik and ex-Samsung Bixby. We’re super lucky to have her guest star in our newsletter!

First question:

What tools do you use for work and how do you hand off your designs?

Kritika: Based on my previous experience, I have primarily utilized in-house tools for my daily design needs in order to facilitate seamless integration with the backend. However, nothing beats a good old flowchart tool such as Lucidchart or Diagrams.net. These tools enable me to focus solely on my design thinking process without worrying about any technical obstacles.

Throughout the design process, I provide detailed notes for the development team to ensure a clear understanding of what is required. Here’s an example of the same:

When the time comes to hand off my design to the development team, these flowcharts are incredibly useful for highlighting critical aspects. Additionally, I create a High-Level Design document that is aligned with the Product Requirement Document (PRD) published by the product manager. This document provides a concise summary of what we aim to build and the value that an efficient design will add to the end user experience. This can range from supporting a new intent to increasing discoverability or reducing agent transfers. Each idea is conveyed with sample conversations, serving as a reference point for all stakeholders, including engineers, data scientists, and other designers, so that everyone is aligned with the product vision.

Even with an exhaustive approach to the design process, it is crucial to have weekly syncs with the engineers to stay up to date on their progress. This proactive approach helps identify gaps early, so they can be addressed before the testing phase.

—

Great answer! 👏🏼 Now for the 2nd question:

What are some assumptions you designed under that didn't work once the project was deployed?

Kritika: During my early years as a Conversation Designer, I assumed users would understand what my chatbot was intended for and would restrict their queries to the use case. However, I soon realized that this assumption did not hold true, especially when creating chatbots for e-commerce clients.

For example, I designed a chatbot for post-order queries and made it clear in the bot's introduction, but customers often had requests beyond the bot's scope, such as "Can you help me purchase a new chair?" or "Does the ToughPly table come with a warranty?". While these requests were not within the bot's intended scope, I still needed to provide a strong fallback response (not just something that would say “I didn’t understand that”) that would redirect the customer and help them understand the bot's capabilities better. These fallbacks are essential for improving the user experience and guiding customers towards the bot's intended use in a graceful manner.
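To make the idea concrete, here is a minimal Python sketch of a fallback that redirects instead of dead-ending. It is not Kritika’s implementation: the capability list, the redirect messages, and the `fallback_response` function are all hypothetical, and simple keyword matching stands in for the intent classification a real NLU or LLM would provide.

```python
# Hypothetical list of what a post-order support bot can do.
CAPABILITIES = ["track an order", "cancel an order", "start a return"]

# Hypothetical out-of-scope topics mapped to where the user should go instead.
# Keyword matching is a stand-in for real intent classification.
REDIRECTS = {
    "purchase": "For new purchases, browse the store or ask our shopping assistant.",
    "warranty": "Warranty details are listed on each product page.",
}


def fallback_response(user_message: str) -> str:
    """Build a fallback that redirects the user instead of a bare 'I didn't understand that'."""
    text = user_message.lower()
    # Use the first redirect whose keyword appears in the message, if any.
    redirect = next((tip for keyword, tip in REDIRECTS.items() if keyword in text), None)
    # Restate the bot's scope as a readable list: "a, b, or c".
    capabilities = ", ".join(CAPABILITIES[:-1]) + ", or " + CAPABILITIES[-1]
    intro = redirect + " " if redirect else "Sorry, I can't help with that one. "
    return intro + f"I'm here for your existing orders: I can help you {capabilities}."


print(fallback_response("Can you help me purchase a new chair?"))
```

Swapping in a real classifier changes how out-of-scope requests are detected, but the shape of the response stays the same: acknowledge the request, point the user somewhere useful, and restate what the bot can do.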

To avoid making false assumptions, I remind myself to separate my role as a conversation designer from the technical aspects of the backend. When working closely with engineers and data scientists, it's easy to become too focused on how the system functions and unintentionally assume that users will understand how to communicate with the chatbot to get the desired outcome.

For example, when designing a chatbot for a pizza restaurant, one might assume that users will provide all the necessary details for their order, such as toppings, base, and quantity, in a single message (because those are all the entities needed to complete the order intent), but that’s rarely the case. It's important to take a step back from the technical details and review the design from the perspective of someone who just wants to order a pizza. This approach helps ensure that the design is grounded in natural conversation.
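The pizza example is essentially a slot-filling problem: rather than expecting every entity in one message, the bot asks only for what is still missing. Here is a minimal Python sketch of that loop. The slot names, prompts, and `next_prompt` function are hypothetical, and it assumes some upstream NLU has already extracted whatever entities the user did mention into a dictionary.

```python
from typing import Dict, Optional

# Hypothetical slots the order-pizza intent needs, in the order we ask for them.
REQUIRED_SLOTS = {
    "quantity": "How many pizzas would you like?",
    "base": "Which base would you like: thin crust, regular, or deep dish?",
    "toppings": "What toppings should we add?",
}


def next_prompt(filled: Dict[str, Optional[str]]) -> str:
    """Ask for the first missing slot, or confirm once every slot is filled."""
    for slot, question in REQUIRED_SLOTS.items():
        if not filled.get(slot):
            return question
    return (f"Great, that's {filled['quantity']} {filled['base']} pizza(s) "
            f"with {filled['toppings']}. Shall I place the order?")


# "I'd like a pepperoni pizza": the (assumed) NLU only found the toppings.
order = {"toppings": "pepperoni"}
print(next_prompt(order))  # How many pizzas would you like?
order["quantity"] = "2"
print(next_prompt(order))  # Which base would you like: thin crust, regular, or deep dish?
order["base"] = "thin crust"
print(next_prompt(order))  # Great, that's 2 thin crust pizza(s) with pepperoni. Shall I place the order?
```

Asking for one missing slot at a time keeps each turn short, while a user who does give everything up front goes straight to confirmation. A production design would likely also let users change an earlier answer mid-flow, which this sketch doesn't handle.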

Sometimes the best you can do is make assumptions about certain aspects of a design, but it's essential to assess user feedback and behavior after deployment to keep iterating on and improving the design.

Thanks for Reading This! 🥰

Voice This! Newsletter is a joint effort of Millani Jayasingkam and Elaine Anzaldo. Opinions expressed are solely our own and do not reflect the views or opinions of our employers.